An Artificial Neural Network (ANN) is a computational model inspired by the structure and functioning of biological neural networks in the brain. It consists of interconnected nodes, known as neurons, organized in layers. Each neuron receives inputs, processes them, and generates an output signal.

The basic building block of an ANN is the artificial neuron, classically introduced as the perceptron, which loosely mimics the functionality of a biological neuron. The perceptron takes multiple inputs, multiplies each input by a weight, sums the results together with a bias term, and then applies an activation function to produce an output.
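To make the weighted-sum-plus-activation step concrete, here is a minimal sketch of a single perceptron in plain Python. It assumes the classic step activation (output 1 when the weighted sum is non-negative, else 0). The function names `step` and `perceptron` and the weight and bias values are hypothetical, chosen only so that this perceptron happens to compute logical AND.

```python
def step(z):
    """Heaviside step activation: fires (1) when the weighted sum is non-negative."""
    return 1 if z >= 0 else 0


def perceptron(inputs, weights, bias):
    # Multiply each input by its weight, sum the products, and add the bias term
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Apply the activation function to produce the output
    return step(z)


# Hypothetical weights and bias that make this perceptron compute logical AND
AND_WEIGHTS = [1.0, 1.0]
AND_BIAS = -1.5

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron([a, b], AND_WEIGHTS, AND_BIAS))
```

Only the input pair (1, 1) pushes the weighted sum above zero (1 + 1 - 1.5 = 0.5), so only that pair produces an output of 1.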

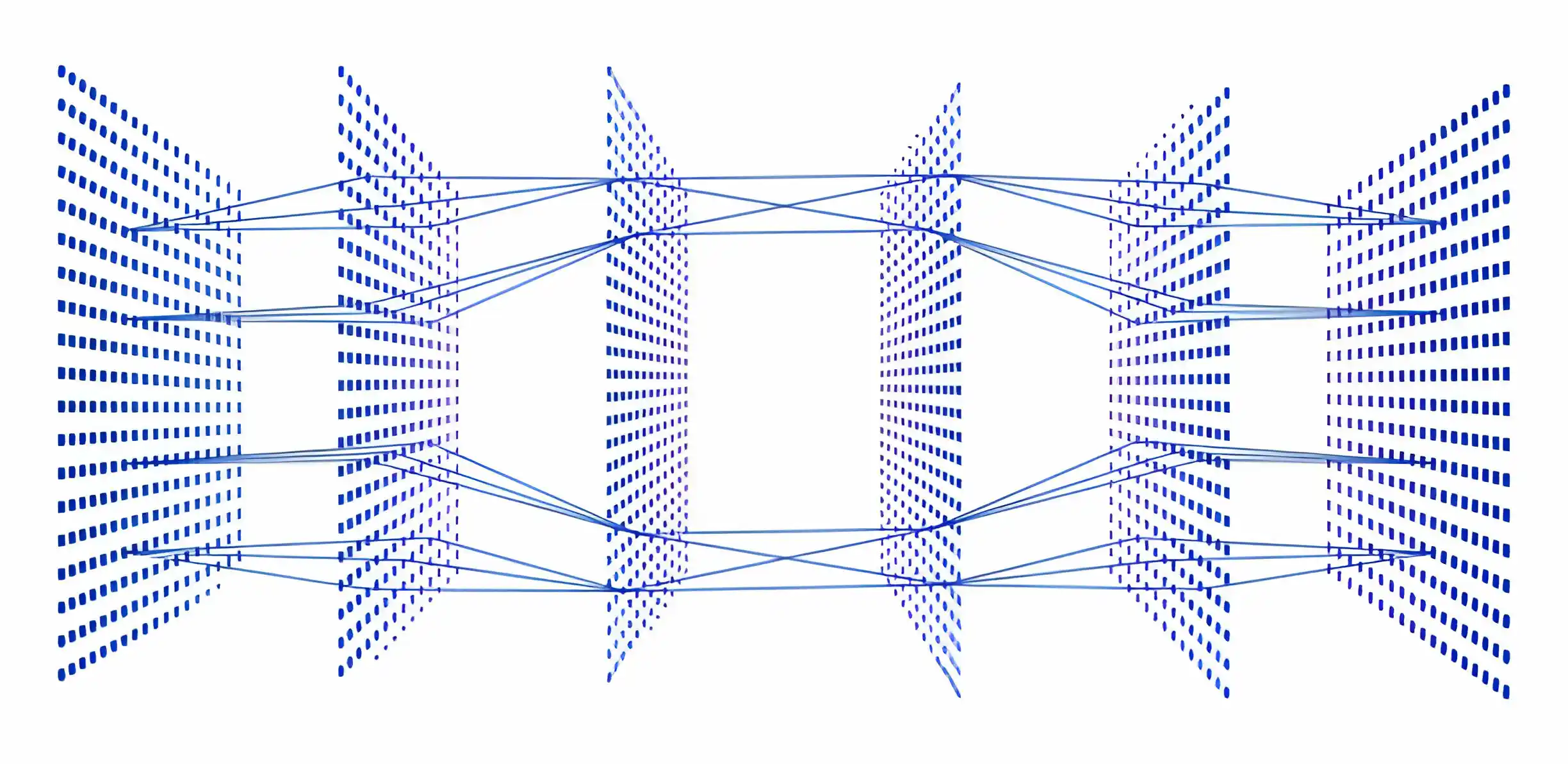

An ANN has three types of layers:

- Input Layer: This layer receives input data and passes it on to the next layer. Each neuron in this layer represents an input feature.

- Hidden Layers: These layers process the inputs received from the previous layer and pass their outputs to the next layer. Deep neural networks have multiple hidden layers, hence the term “deep learning.”

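- Output Layer: This layer produces the network's final prediction, and its structure depends on the type of problem being solved. For example, in a binary classification problem, the output layer might have a single neuron whose output, typically a value between 0 and 1, is thresholded to predict either class 0 or class 1.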
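The flow of data through the three kinds of layers can be sketched as a forward pass. This is an illustrative sketch, not a reference implementation: it assumes fully connected layers with a sigmoid activation, a network with 2 input features, 3 hidden neurons and 1 output neuron, and hypothetical weight values and names (`dense_layer`, `predict`, `HIDDEN_W`, and so on) picked only for demonstration.

```python
import math


def sigmoid(z):
    # Squashes any real number into the open interval (0, 1)
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases):
    """One fully connected layer: each row of `weights` belongs to one neuron."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


# Hypothetical parameters for a 2-input, 3-hidden-neuron, 1-output network
HIDDEN_W = [[0.5, -0.4], [0.3, 0.8], [-0.7, 0.2]]
HIDDEN_B = [0.1, -0.2, 0.05]
OUTPUT_W = [[1.2, -0.6, 0.9]]
OUTPUT_B = [-0.3]


def predict(features):
    # Input layer: the raw feature values, one per input neuron
    hidden = dense_layer(features, HIDDEN_W, HIDDEN_B)   # hidden layer
    output = dense_layer(hidden, OUTPUT_W, OUTPUT_B)[0]  # single output neuron
    # Threshold the (0, 1) output at 0.5 to get a binary class label
    return output, int(output >= 0.5)


prob, label = predict([1.0, 0.5])
print(f"output={prob:.3f}, class={label}")
```

The hidden layer here turns 2 input values into 3 intermediate values, and the single sigmoid output neuron matches the binary classification case described in the list.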

Training an ANN involves adjusting the weights (and biases) of the connections between neurons to minimize the difference between the network's actual output and the desired output for a given set of inputs. This is typically done with an optimization algorithm such as gradient descent, where backpropagation computes how much each weight contributed to the error; the network learns from labeled training data by iteratively adjusting its parameters in the direction that reduces that error.
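The training loop can be sketched from scratch to show how backpropagation and gradient descent fit together. This sketch makes several assumptions not stated above: one hidden layer of sigmoid neurons, a binary cross-entropy loss, full-batch gradient descent with a fixed learning rate, and the logical OR truth table as toy labeled data. All function names are hypothetical; in practice a framework such as PyTorch or TensorFlow computes these gradients automatically.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_network(n_in, n_hidden, seed=0):
    # Small random weights so hidden neurons start out different from each other
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(net, x):
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(net["W1"], net["b1"])]
    y = sigmoid(sum(w * hi for w, hi in zip(net["W2"], h)) + net["b2"])
    return h, y


def mean_loss(net, data):
    # Mean binary cross-entropy between predictions y and targets t
    total = 0.0
    for x, t in data:
        _, y = forward(net, x)
        total -= t * math.log(y) + (1 - t) * math.log(1 - y)
    return total / len(data)


def train_step(net, data, lr):
    n_hidden, n_in = len(net["W2"]), len(net["W1"][0])
    gW1 = [[0.0] * n_in for _ in range(n_hidden)]
    gb1, gW2, gb2 = [0.0] * n_hidden, [0.0] * n_hidden, 0.0
    for x, t in data:
        h, y = forward(net, x)
        # Backpropagation: for sigmoid + cross-entropy, dL/dz_out = y - t
        d_out = y - t
        gb2 += d_out
        for j in range(n_hidden):
            gW2[j] += d_out * h[j]
            # Chain rule back through the output weight and the hidden sigmoid
            d_h = d_out * net["W2"][j] * h[j] * (1 - h[j])
            gb1[j] += d_h
            for i in range(n_in):
                gW1[j][i] += d_h * x[i]
    # Gradient descent: step every parameter against its averaged gradient
    n = len(data)
    for j in range(n_hidden):
        net["W2"][j] -= lr * gW2[j] / n
        net["b1"][j] -= lr * gb1[j] / n
        for i in range(n_in):
            net["W1"][j][i] -= lr * gW1[j][i] / n
    net["b2"] -= lr * gb2 / n


# Toy labeled training data: the logical OR truth table
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

net = init_network(n_in=2, n_hidden=3, seed=42)
before = mean_loss(net, OR_DATA)
for epoch in range(5000):
    train_step(net, OR_DATA, lr=0.5)
after = mean_loss(net, OR_DATA)
print(f"loss before={before:.4f}, after={after:.4f}")
```

Each call to `train_step` is one iteration of the process described above: a forward pass, a backward pass that accumulates gradients, and a parameter update. The printed loss should drop substantially as training proceeds.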

ANNs have found applications in various fields such as image and speech recognition, natural language processing, medical diagnosis, finance, and many others. They are particularly powerful for tasks involving pattern recognition, classification, regression, and function approximation. However, they can be computationally intensive and require large amounts of data for training, especially for deep neural networks.