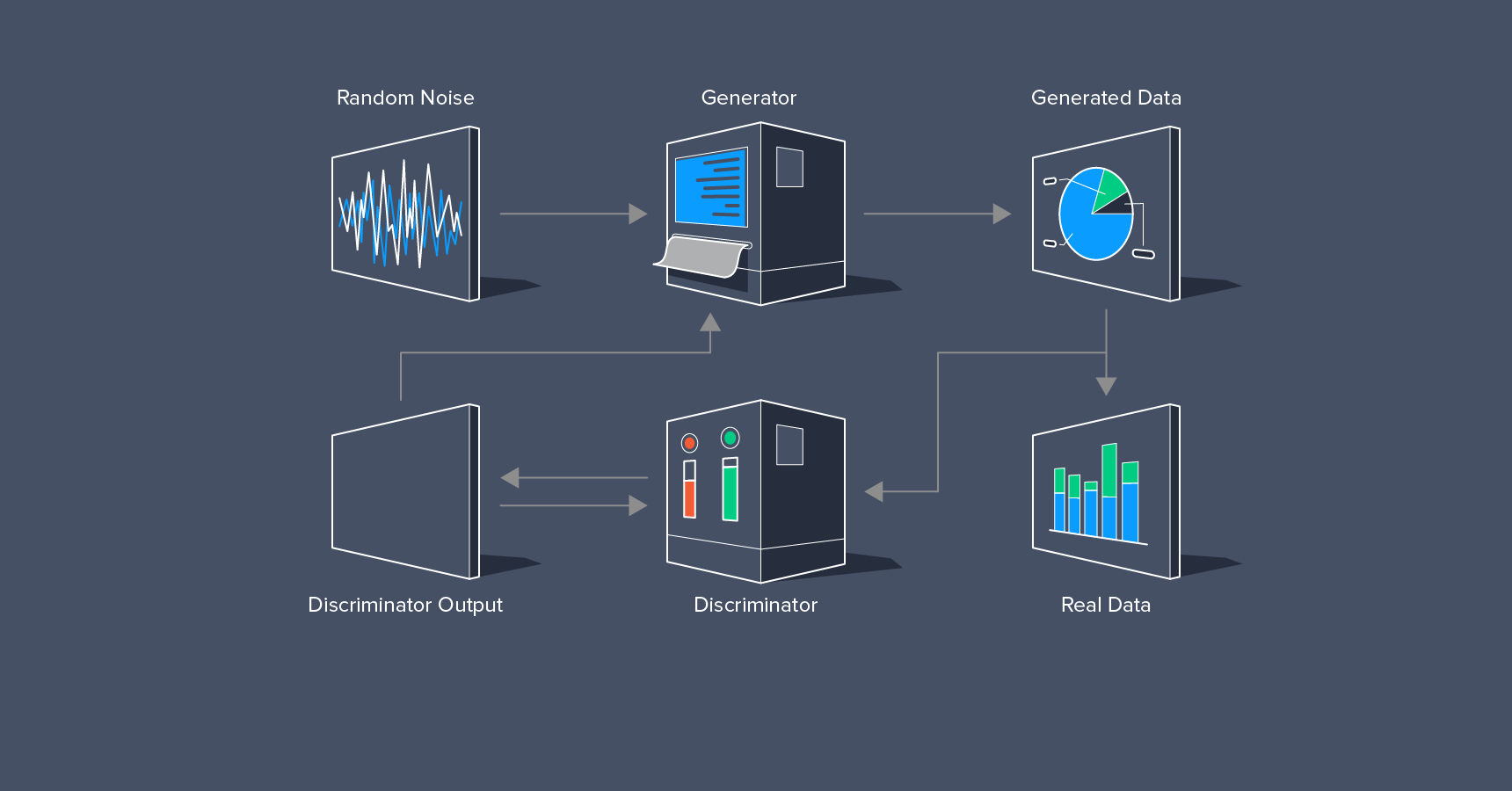

Generative Adversarial Networks (GANs) are a class of machine learning frameworks introduced by Ian Goodfellow and his colleagues in 2014. A GAN consists of two neural networks, the generator and the discriminator, that compete against each other in a game. Here’s a brief overview of how they work:

- Generator: This network generates new data instances. It takes random noise as input and produces data samples that mimic the real data. The goal of the generator is to create data that is indistinguishable from the real data.

- Discriminator: This network evaluates data and determines whether a given instance is real (from the training data) or fake (generated by the generator). The goal of the discriminator is to correctly classify real and fake data instances.
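To make the two roles concrete, here is a minimal sketch in plain Python. It assumes a toy one-dimensional setting in which the generator is an affine map of the noise and the discriminator is a logistic model. The names `generator` and `discriminator` and the parameters `a`, `b`, `w`, `c` are illustrative assumptions, not part of any library; real GANs use deep neural networks for both roles.

```python
import math
import random

def generator(z, a, b):
    """Map a noise value z to a fake sample (toy affine generator, an assumption)."""
    return a * z + b

def discriminator(x, w, c):
    """Return the estimated probability that x is real (toy logistic model)."""
    return 1.0 / (1.0 + math.exp(-(w * x + c)))

random.seed(0)
z = random.gauss(0.0, 1.0)                   # random noise input
fake = generator(z, a=1.0, b=0.0)            # generated sample
p_real = discriminator(fake, w=0.5, c=0.0)   # probability the sample is real
print(f"fake sample: {fake:.3f}, D(fake) = {p_real:.3f}")
```

The generator never sees real data directly; it only receives noise, and it learns through the discriminator's verdict on its outputs.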

The training process is a zero-sum game:

- The generator tries to produce data that the discriminator cannot distinguish from real data.

- The discriminator tries to get better at identifying real vs. fake data.
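This game is commonly written as the minimax objective from the original 2014 paper. In the notation assumed here, $D(x)$ is the discriminator's estimated probability that $x$ is real, $G(z)$ is the generator's output for noise $z$, $p_{\text{data}}$ is the real data distribution, and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

The discriminator increases $V$ by pushing $D(x)$ toward 1 on real data and $D(G(z))$ toward 0 on generated data, while the generator decreases $V$ by pushing $D(G(z))$ toward 1. Any gain for one player is a loss for the other, which is what makes the game zero-sum.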

Over time, both networks improve: the generator becomes better at creating realistic data, while the discriminator becomes more skilled at identifying fakes. Ideally, training continues until the generator's samples are so realistic that the discriminator can do no better than guessing, assigning a probability of about 0.5 to every input.
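The alternating updates can be sketched as a toy training loop in plain Python. Everything specific here is an assumption made for illustration: the "real" data is drawn from a normal distribution with mean 4, the generator is the affine map `a*z + b`, the discriminator is a logistic model `sigmoid(w*x + c)`, and the gradients are derived by hand. The generator step uses the non-saturating loss `-log D(G(z))` rather than the original minimax form. The function name `train_toy_gan` and its parameters are hypothetical.

```python
import math
import random

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters: G(z) = a*z + b
    w, c = 0.0, 0.0   # discriminator parameters: D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]   # assumed real data
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: minimize -[log D(real) + log(1 - D(fake))].
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw += -(1.0 - d) * x
            gc += -(1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            gw += d * x
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: minimize -log D(G(z)) (non-saturating variant).
        ga = gb = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            ga += -(1.0 - d) * w * z
            gb += -(1.0 - d) * w
        a -= lr * ga / batch
        b -= lr * gb / batch
    return a, b, w, c

a, b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real mean is 4.0)")
print(f"D at the real mean = {sigmoid(w * 4.0 + c):.2f}")
```

Because this discriminator is linear in `x`, it can only detect a difference in the mean, so the sketch shows the mechanics of the alternating updates rather than a full distribution match. The printed values depend on the seed and step count.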

Applications of GANs

GANs have a wide range of applications, including:

- Image Generation: Creating realistic images, art, and designs.

- Data Augmentation: Generating additional training data for machine learning models.

- Image Super-Resolution: Enhancing the resolution of images.

- Text-to-Image Translation: Generating images from textual descriptions.

- Style Transfer: Applying the style of one image to the content of another.

- Video Generation: Creating realistic video sequences.

- 3D Object Generation: Designing 3D models for use in various industries.

Challenges in Training GANs

Training GANs can be challenging due to issues such as:

- Mode Collapse: The generator produces a limited variety of samples, covering only a few modes of the real data distribution.

- Non-Convergence: The networks do not reach a stable equilibrium.

- Vanishing Gradients: When the discriminator becomes too accurate, the generator's gradients may become too small for effective training.

- Oscillations: The networks' losses and parameters may cycle instead of settling, so sample quality fluctuates during training.
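The vanishing-gradient issue can be illustrated numerically. The sketch below assumes the discriminator's output on a generated sample is `sigmoid(logit)`. It compares the gradient, with respect to that logit, of the original generator loss `log(1 - D)` against the commonly used non-saturating alternative `-log D`. The function names are illustrative only.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def grad_saturating(logit):
    """d/dlogit of log(1 - sigmoid(logit)), the original minimax generator loss."""
    return -sigmoid(logit)

def grad_non_saturating(logit):
    """d/dlogit of -log(sigmoid(logit)), the non-saturating alternative."""
    return -(1.0 - sigmoid(logit))

# A more negative logit means the discriminator rejects the fake more confidently.
for logit in (0.0, -2.0, -5.0, -10.0):
    d = sigmoid(logit)
    print(f"D(G(z))={d:.5f}  saturating={grad_saturating(logit):+.5f}  "
          f"non-saturating={grad_non_saturating(logit):+.5f}")
```

As the discriminator grows more confident that a sample is fake (its output approaches 0), the gradient of the original loss shrinks toward zero, while the non-saturating gradient stays close to -1. This is one reason the non-saturating form is often preferred in practice.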

Despite these challenges, GANs are a powerful tool in the field of generative modeling and have led to significant advancements in artificial intelligence and machine learning.